Wii GPU is similar to ATI Rv610

Posted by tapionvslink

March 12, 2010 09:17PM

The Wii's Hollywood GPU is capable of GPGPU natively and is comparable to an ATI RV610, since it can do HDR+AA in games like Monster Hunter 3, Resident Evil: The Darkside Chronicles, Silent Hill, Cursed Mountain, etc. HDR+AA was not possible even on the NVIDIA GeForce 7 series. HDR required a lot of power, and combining it with AA (anti-aliasing) was out of the question until the new architecture of the ATI X1000 family showed it was possible, even though those cards had only 16 pixel pipelines against the 24 of the GeForce 7 series. The ATI X1000 cards were also the first GPUs capable of GPGPU natively.

ATI Radeon X1000 and NVIDIA GeForce 8800: the first GPUs capable of GPGPU natively

According to this report, GPGPU is not practical on ATI Radeons older than the X1000 series (R520 and R580):

[news.softpedia.com]

"

Among the GPGPU capable ATI and Nvidia chips there are those of the latter generation like the GeForce 8800 and Radeon X1000 and HD 2000s, but older chips can too be used but as they are slower don't hold your breath waiting for results. Unlike the processors that are capable only of a limited degree of parallelism, being able to execute a few SIMD instructions per clock cycle, the latest graphics chips can process hundreds. This feature is hardware implemented in all newer video chips and it is related to the fact that such a chip is made up by many identical processing units like the pixel shading units. For example, ATI's Radeon X1900 chip has 48 such units, while the GeForce 8800 numbers 128, so their parallel processing capabilities are very high.

"

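To make the parallelism argument in the quote concrete, here is a deliberately simplified Python model. It is an illustration, not how either chip actually schedules work. It assumes every unit processes one independent element per round, and it ignores clock speed as well as the very different widths of the X1900's pixel shader units and the GeForce 8800's scalar stream processors. The workload size of 1920 elements is an arbitrary example.

```python
import math
from typing import Callable, List


def passes_needed(num_elements: int, num_units: int) -> int:
    """Rounds needed if each of num_units units handles one element per round (simplified model)."""
    return math.ceil(num_elements / num_units)


def run_kernel(data: List[float], kernel: Callable[[float], float], num_units: int) -> List[float]:
    """Apply the same kernel to every element, num_units elements per round.

    The grouping into rounds stands in for the units working side by side;
    the result is exactly the same as a plain map over the data.
    """
    out: List[float] = []
    for start in range(0, len(data), num_units):
        batch = data[start:start + num_units]  # one round across all units
        out.extend(kernel(x) for x in batch)
    return out


if __name__ == "__main__":
    n = 1920  # arbitrary example workload
    print(passes_needed(n, 48))   # 48 units, as quoted for the X1900 -> 40
    print(passes_needed(n, 128))  # 128 units, as quoted for the GeForce 8800 -> 15
```

The kernel never looks at neighbouring elements, and that independence is what lets a GPU spread the work across all of its units at once.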
ATI Radeon X1000 family: HDR+anti-aliasing

[www.xbitlabs.com]

"

HDR: Speed AND Quality

The new generation of ATI’s graphics processors fully supports high dynamic range display modes, known under the common name HDR.

One HDR mode was already available in RADEON X800 family processors, but game developers didn’t appreciate that feature much. We also described HDR in detail in our review of the NV40 processor which supported the OpenEXR standard with 16-bit floating point color representation developed by Industrial Light & Magic (for details see our article called NVIDIA GeForce 6800 Ultra and GeForce 6800: NV40 Enters the Scene).

OpenEXR was chosen as a standard widely employed in the cinema industry to create special effects in movies, but PC game developers remained rather indifferent. The 3D shooter Far Cry long remained the only game to support OpenEXR and even this game suffered a tremendous performance hit in the HDR mode. Resolutions above 1024x768 were absolutely unplayable. Moreover, the specifics of the implementation of HDR in NVIDIA’s graphics architecture made it impossible to use full-screen antialiasing in this mode (on the other hand, FSAA would just result in an even bigger performance hit). The later released GeForce 7800 GTX, however, had enough speed to allow using OpenEXR with some comfort, but it still didn’t permit to combine it with FSAA.

ATI Technologies took its previous experience into account when developing the new architecture and the RADEON X1000 acquired widest HDR-related capabilities, with various – and even custom – formats. The RADEON X1000 GPUs also allows you to use HDR along with full-screen antialiasing. This is of course a big step forward since the NVIDIA GeForce 6/7, but do the new GPUs have enough performance to ensure a comfortable speed in the new HDR modes? We’ll only know this after we test them, but at least we know now why the R520 chip, the senior model in ATI’s new GPU series, came out more complex than the NVIDIA G70. The above-described architectural innovations each required its own portion of transistors in the die. As a result, the R520 consists of 320 million transistors – the most complex graphics processor today! – although it has 16 pixel pipelines against the G70’s 24.

"

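The practical difference between the 16-bit floating point ("half") format mentioned in the quote and an ordinary 8-bit-per-channel framebuffer can be shown with Python's struct module, whose 'e' format code packs IEEE 754 binary16 values (Python 3.6+). This only illustrates storage. The 8-bit helper is a hypothetical stand-in for a conventional normalized color channel.

```python
import struct


def to_half(x: float) -> float:
    """Round-trip a value through IEEE 754 binary16 (FP16, 'half' precision)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_unorm8(x: float) -> float:
    """Hypothetical 8-bit normalized channel: clamp to [0, 1], then quantize to 1/255 steps."""
    q = round(min(max(x, 0.0), 1.0) * 255)
    return q / 255


if __name__ == "__main__":
    for radiance in (0.25, 1.0, 4.0, 100.0):
        # FP16 keeps the values above 1.0; the 8-bit channel clips them all to 1.0.
        print(radiance, to_half(radiance), to_unorm8(radiance))
```

The largest finite FP16 value is 65504, which leaves plenty of headroom for bright light sources. The cost is twice the memory per channel compared with 8 bits.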
ATI R520 (the GPU in the ATI Radeon X1000 series): the first GPU that supported HDR+AA

[en.wikipedia.org]

"

ATI's DirectX 9.0c series of graphics cards, with complete Shader Model 3.0 support. Launched in October 2005, this series brought a number of enhancements including the floating point render target technology necessary for HDR rendering with anti-aliasing. Cards released include X1300 - X1950.

"

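A single multisampled pixel shows why a floating point render target matters for HDR with anti-aliasing. The example makes several assumptions: 4x MSAA, a plain box-filter resolve (the average of the samples) and made-up radiance values. Real hardware and engines differ, and some resolve after tone mapping instead.

```python
from typing import List


def resolve_box(samples: List[float]) -> float:
    """Average the sub-samples of one pixel (assumed plain box-filter MSAA resolve)."""
    return sum(samples) / len(samples)


def clamp01(x: float) -> float:
    """What an 8-bit normalized render target does to out-of-range values."""
    return min(max(x, 0.0), 1.0)


# Hypothetical 4x MSAA pixel on the edge of a bright light:
# two samples see the light (radiance 20.0), two see a dark wall (0.1).
pixel = [20.0, 20.0, 0.1, 0.1]

hdr_resolved = resolve_box(pixel)                        # float target keeps the 20.0 samples
ldr_resolved = resolve_box([clamp01(s) for s in pixel])  # 8-bit target clips them first
```

With the float target the edge pixel ends up about 10.05, roughly half as bright as the light, and can still be tone mapped afterwards. With the clamped target the light has already been flattened to 1.0 before averaging, so the result is about 0.55.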
ATI's Hollywood design was finished after June 2006, when NEC announced that it would supply the Wii's eDRAM in collaboration with MoSys.

Monster Hunter 3 on Wii shows HDR+AA:

[news.vgchartz.com]

"

Monster Hunter 3 demo impressions

By Nick Roussounelos 14th Jun 2009


We tried out the Monster Hunter 3 demo bundled with the Wii version of Monster Hunter G. Quest Complete!

Monster Hunter 3 (~Tri) Preview

Upon the series debut on PS2 back in 2004 the public reaction to the Monster Hunter series was, at best, mediocre. Someone, who is unquestionably very happy now, proposed porting Monster Hunter to Sony's portable wonder and here we are, with MH2G climbing to 3 million and counting! Now, let's take a sneak peek at how things fare for the next iteration of the franchise, Monster Hunter 3, developed from the ground up on Wii, through the free demo bundled with the system's own version of Monster Hunter G which was recently released. Before we start, let me make it clear that I don't speak Japanese, so don't ask for any story coverage.

The demo consists of two quests, hunt either a Kurubekko (little wyvern much like Kut-Ku) or an alpha carnivore, Dosjagi. You can choose between 5 weapons: Great Sword, Sword & Shield, Hammer, Light Crossbow and Heavy Crossbow. Make your selections and off you go! The first thing you'll notice is the spectacular graphics; no, that's no hyperbole. The lighting, the texture detail, anti-aliasing (yes, you read that right, no jaggies), HDR (High dynamic range rendering for us geeks) and other technologies make this one of the prettiest Wii games on the market, period. PSP Monster Hunter games looked phenomenal on the platform and pushed the hardware beyond what was thought possible, Capcom made no compromises this time either. The game also features a great musical score, which is much more epic in scope than previous iterations. Loading times between each areas take 1-2 seconds tops, nothing disturbing, but not very pleasant either.

"

Other Wii games have also shown HDR+AA, like Cursed Mountain, Resident Evil: The Darkside Chronicles and Silent Hill, and it seems that Xenoblade will too. By the way, you should also look at games like Zangeki no Reginleiv; watch the videos on gametrailers.com. There is one more thing you should know: HDR cannot be done without programmable shaders.

Here is one source that says so:

[courses.csusm.edu]

"

OpenGL fixed functions cannot be used for HDR.

The programmable pipeline must be used to achieve HDR lighting effects.

HDR is achieved in OpenGL using a fragment shader, written in GLSL or Cg.

"

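On hardware with programmable fragment shaders, the last step of HDR rendering is usually a tone-mapping pass that runs once per pixel. The sketch below writes that per-pixel logic in Python rather than GLSL so it can run anywhere. It uses the well-known Reinhard operator L / (1 + L) and a simple 2.2 power-law gamma, both chosen here as assumptions. The games mentioned in this post may use entirely different methods.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def reinhard(hdr: float, exposure: float = 1.0) -> float:
    """Map an unbounded HDR value into [0, 1) with the Reinhard operator L / (1 + L) (assumed choice)."""
    lum = max(hdr, 0.0) * exposure
    return lum / (1.0 + lum)


def encode_gamma8(linear: float, gamma: float = 2.2) -> int:
    """Approximate display encoding with a plain power-law gamma (assumed), quantized to 8 bits."""
    return round((linear ** (1.0 / gamma)) * 255)


def shade_pixel(hdr_rgb: RGB, exposure: float = 1.0) -> Tuple[int, int, int]:
    """What a tone-mapping fragment shader would compute for one pixel, channel by channel."""
    r, g, b = (encode_gamma8(reinhard(c, exposure)) for c in hdr_rgb)
    return (r, g, b)
```

In a real shader the same three lines of math would run in parallel for every pixel of the floating point framebuffer, with the exposure typically passed in as a uniform.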
By the way, Zelda: Twilight Princess did not use real HDR, only a simulation of it. Just compare Zelda screenshots with Monster Hunter 3 screenshots and you will easily see the difference.

I am sure that most developers who say the Wii has no programmable shaders say so because they know DirectX's Shader Model but not the OpenGL Shading Language. Nintendo uses an API called GX, which is very similar to OpenGL, and there have also been successful attempts at using wrappers like the Mesa (OpenGL) driver:

[www.phoronix.com]

"

A Mesa (OpenGL) Driver For The Nintendo Wii?

Posted by Michael Larabel on January 28, 2009

There is now talk on the Mesa 3D development list about the possibility of having a Mesa driver for the Nintendo Wii. Those working on developing custom games for this console platform have already experienced some success in bringing OpenGL to the Wii through the use of Mesa.

Nintendo has its own graphics API (GX) for the Wii, which is resemblant of OpenGL but still different enough that some work is required to get OpenGL running. A way to handle this though is by having a Mesa driver translate the OpenGL calls into the Wii's GX API. This is similar to gl2gx, which is an open-source project that serves as a software wrapper for the Nintendo Wii and GameCube.

Their first OpenGL instructions running on the Nintendo Wii is shown below (it's not much, but at least it's better than seeing glxgears

[www.phoronix.net]

"

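The core mismatch a Mesa driver or a gl2gx-style wrapper has to handle can be sketched in a few lines. OpenGL immediate mode does not say how many vertices follow glBegin. GX's begin call, as exposed by the homebrew libogc library (GX_Begin(primitive, vertex format, vertex count)), needs that count up front, so the wrapper buffers vertices until glEnd. This hypothetical Python class only records the GX-style calls as strings. The GX names follow libogc but should be treated as assumptions, and real setup such as vertex descriptors is omitted.

```python
from typing import List, Optional, Tuple


class GLToGXWrapper:
    """Hypothetical translation layer: buffers GL immediate-mode calls, logs GX-style calls.

    GX call names are modeled on libogc and are assumptions, not a verified binding.
    """

    # Assumed primitive mapping; a real wrapper would cover more modes.
    PRIMITIVES = {"GL_TRIANGLES": "GX_TRIANGLES", "GL_QUADS": "GX_QUADS"}

    def __init__(self) -> None:
        self.emitted: List[str] = []  # translated calls, in order
        self._prim: Optional[str] = None
        self._vertices: List[Tuple[float, float, float]] = []

    def glBegin(self, mode: str) -> None:
        self._prim = self.PRIMITIVES[mode]
        self._vertices = []

    def glVertex3f(self, x: float, y: float, z: float) -> None:
        # GX wants the vertex count before the first vertex, so only buffer here.
        self._vertices.append((x, y, z))

    def glEnd(self) -> None:
        if self._prim is None:
            raise RuntimeError("glEnd without glBegin")
        self.emitted.append(f"GX_Begin({self._prim}, GX_VTXFMT0, {len(self._vertices)})")
        for x, y, z in self._vertices:
            self.emitted.append(f"GX_Position3f32({x}, {y}, {z})")
        self.emitted.append("GX_End()")
        self._prim, self._vertices = None, []


if __name__ == "__main__":
    gl = GLToGXWrapper()
    gl.glBegin("GL_TRIANGLES")
    gl.glVertex3f(0.0, 1.0, 0.0)
    gl.glVertex3f(-1.0, -1.0, 0.0)
    gl.glVertex3f(1.0, -1.0, 0.0)
    gl.glEnd()
    print("\n".join(gl.emitted))
```

Buffering until glEnd is the simplest design. A faster wrapper might instead build GX display lists, at the cost of more bookkeeping.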
Or perhaps it is because they are using the first SDKs, which came out even before Hollywood's design was finished.

There are many engines that support programmable shaders on Wii, for example:

Unity for Wii: [unity3d.com]

"

Scriptable Shaders

Unity's ShaderLab system has been expanded to unlock the full power of The Wii console's graphics chip. Use one of the built-in Wii-optimized shaders or write your own. Script and modify at runtime any shader on any objects in any way you like.

"

Gamebryo LightSpeed for Wii:

[www.emergent.net]

"

Gamebryo 2.6 Features:

Full tools capabilities for Nintendo Wii:

Wii Terrain: Emergent Terrain System is now part of Wii, with base terrain/shader support, port of terrain samples and detail maps in shader.

Display List from tools reduces memory requirements

Improved resource utilization via VAT

Copy / Fast Copy

Mesh profiles

Floodgate – improves performance and reduces risk when porting from multi-core platforms.

Reduces MEM1 memory requirements

Saves memory with exports that use smaller data types

"

Athena engine for Wii (used in Cursed Mountain): [wii.ign.com]

"

To deliver on these goals, the Sproing team relies on its proprietary "Athena" game engine, which is rendering the Himalayas on Wii at a quality never seen before. The engine highlights of "Athena" include amongst others, HDR-Rendering, shader simulations developed especially for Wii in order to display ice, heat and water (realistic reflections and refractions), an ultra-fast particle system for amazing snow storms, soft particles for realistic fog and smoke, depth of field, motion blur, dynamic soft shadows, spherical harmonics lighting, as well as a high performance level-of-detail and streaming system in order to provide long viewing distance of the entire surrounding. In order to create an exciting atmosphere when battling the ghosts, the game employs a number of custom-created special effects such as the shader simulations as well as a newly developed post-processing framework. "Our engine technology really takes the Wii hardware to its limits and Wii gamers can really look forward to a heart-stopping, and breath-taking world that comes alive with this title," said Gerhard Seiler, Technical Director of Sproing.

"

Shark 3D for Wii™ (read about the Re-entrant Modular Shader System™):

[www.spinor.com]

"

4. Empower your game designer: Live editing

One of Shark 3D’s core features is the tool pipeline, which supports live editing from the bottom up.

Edit your game items and NPCs in the Shark 3D Wii game editor,

edit your game scripts,

edit your textures in Photoshop,

edit your animations and geometry in Max or Maya,

edit your rendering effects in the Shark 3D Wii shader editor, ...

... and see and test all your changes live not only on your PC, but also on your Wii development kit!

Furthermore, supported by Shark 3D's live-live™ editing, developers can easily modify and customize the tool pipeline for their particular needs. This makes game production for Wii more efficient and predictable, significantly reducing production times and risks.

5. Empower your artists: Lighting, shadowing, reflections on Wii - easier than ever!

The node based shader editor empowers your designers to create their own Wii shader graphs.

Your designers can easily create, combine and customize lighting, shadowing, reflections, shader animations, color operations, and many other effects.

Internally, the shader editor automatically compiles the Wii shader graphs into assemblings of Shark 3D renderer modules and pipeline stages, powered by the Re-entrant Modular Shader System™ (http://www.spinor.com/?select=tech/render#modular_shader_system). Of course, the designers can edit the shaders interactively both on the Wii and on PC. This is especially useful when fine tuning the look on the Wii.

8. Many useful run-time features included

Run-time features of Shark 3D for Wii include the following:

Rendering

Efficient rendering: Off-line generation and Wii specific optimization of Wii display lists and Wii vertex data.

Efficient skinning: Off-line assembling of skinned meshes specifically for the Wii. At run-time, the CPU does not touch any vertex, index or polygon data anymore.

Wii specific off-line conversion of textures for optimal performance and minimal memory usage.

A multi-layer animation system.

A character walking animation system.

Efficient culling of static and movable objects for rendering.

A shader system providing access to Wii specific render state settings.

A particle system.

Dynamic local lights.

Lighting management respecting Wii specific aspects.

GUI rendering.

TrueType Unicode text rendering.

Smart management of object state dependencies using delayed evaluation.

Smart attachment point management.

Multiple viewports with separate culling for multiplayer games.

And many other features.

Sound

Optimized Wii specific sound formats.

Run-time support for compressed Ogg Vorbis files.

Efficient culling of static and movable sound sources placed in the level.

Streaming sound (e.g. background music) from disk.

Wii specific off-line optimization and conversion of sounds for optimal performance and minimal memory usage.

Various other sound engine features.

Physics

Collision detection.

Physics.

Automatically generated sound for physics collisions and friction.

Automatic merging of nearby contact sounds.

Various constraints.

Generic path-based motors.

Various other physics features.

Game engine

Triggers and sensors.

Scripting.

Level management.

Many other game engine features.

Streaming

Background loading of levels without halting the rendering loop.

Background loading of levels parts and asset groups without halting the rendering loop.

Resource and memory management

Pack files.

Optimized low-level memory management.

Optimized for avoiding implicit memory allocations while a level is running. This avoids unexpected out-of-memory errors while a level is running.

Many other features.

"

Another proof that ATI Hollywood was designed for GPGPU is Havok FX

Wii Havok FX

[www.gamasutra.com]

"

Product: Havok Supports Wii, Next-Gen At E3

by Jason Dobson


May 15, 2006

During last week's E3 event in Los Angeles, cross-platform middleware physics solution provider Havok announced support for the Wii, Nintendo's upcoming next-generation video game console platform.

The GameCube's current library of software titles feature more than fifteen games that utilize Havok middleware, and this announcement confirms the continued support of Nintendo by Havok.

"Havok has become synonymous with state-of-the-art physics in games in recent years," said Ramin Ravanpey, Director of Software Development Support, Nintendo of America. "With this announcement from Havok, we feel Wii developers have another critical tool in their hands that helps unleash the real magic of the Wii platform."

In addition, Havok's software solutions were featured in 35 titles from 25 different developers at E3 across multiple platforms, including Alan Wake, Alone in the Dark, Assassin, Auto Assault, BioShock, Brothers in Arms Hell's Highway, Cars, Company of Heroes, Crackdown, Dawn of Mana, Dead Rising, Destroy All Humans! 2, F.E.A.R., Ghost Recon Advanced Warfighter, Happy Feet, Heavenly Sword, Hellgate: London, Just Cause, Killzone, Lost Planet: Extreme Condition, Medal of Honor Allied Assault, Motor Storm, NBA Live 07, Over the Hedge, Saint's Row, Shadowrun, Sonic The Hedgehog, Splinter Cell 4, Spore, Stranglehold, Superman Returns: The Videogame, Test Drive Unlimited, The Ant Bully, The Godfather, and Urban Chaos: Riot Response.

"We wanted the action in our game to focus on interactive elements in a highly intuitive manner," says David Nadal, Game Director at Eden Games. "We knew Havok Physics could help us do that for game-play elements, but we wanted to push the envelope even further to add persistent effects that could interact with game-play elements. Havok's GPU-accelerated physics effects middleware helped us achieve that in surprisingly little time."

Through the use of Havok FX and GPU technology, game developers are able to implement a range of physical effects like debris, smoke, and fluids that add detail and believability to Havok’s physics system. Havok FX is cross-platform, takes advantage of current and next-generation GPU technology, and utilizes the native power of Shader Model 3 class graphics cards to deliver effect physics that integrate seamlessly with Havok’s physics technology found in Havok Complete.

“With Havok FX we can explore new types of visual effects that add realism into Hellgate: London,” commented Tyler Thompson, Technical Director, Flagship Studios. “Given the widespread installed base of GPUs and the incredible performance of the new Nvidia GeForce 7900 boards, Havok FX was a natural choice."

"

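Effect physics of the kind Havok FX targets (debris, smoke) maps well to GPUs because each particle's update reads only that particle's own state. The following is a generic sketch, not Havok's API. It uses semi-implicit Euler integration with gravity and a damped bounce on a ground plane. The per-particle function is what a shader would run once per particle, and the units and restitution value are assumptions.

```python
from dataclasses import dataclass
from typing import List

GRAVITY = -9.81  # m/s^2 along the vertical axis (assumed units)


@dataclass(frozen=True)
class Particle:
    y: float   # height above the ground plane
    vy: float  # vertical velocity


def step(p: Particle, dt: float, restitution: float = 0.5) -> Particle:
    """Advance one particle by dt using only its own state (generic sketch, not Havok FX)."""
    vy = p.vy + GRAVITY * dt
    y = p.y + vy * dt            # semi-implicit Euler: position uses the new velocity
    if y < 0.0:                  # below the ground: clamp and bounce with energy loss
        y, vy = 0.0, -vy * restitution
    return Particle(y, vy)


def step_all(particles: List[Particle], dt: float) -> List[Particle]:
    # On a GPU this loop would become one shader/kernel invocation per particle;
    # because step() reads no other particle, the order does not matter.
    return [step(p, dt) for p in particles]
```

Collisions between particles would break that independence, which is why GPU effect physics of this era typically stuck to debris and smoke that collide only with the static world.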
And as you may know, Havok FX is designed only for physics on the GPU, while Havok Hydracore is the one designed for multicore CPUs.

If you still doubt that Havok FX is related to GPGPU, read this:

[www.firingsquad.com]

"

..

FiringSquad: One of Havok's competitors, AGEIA, has said of the ATI-Havok FX hardware set up, "Graphics processors are designed for graphics. Physics is an entirely different environment. Why would you sacrifice graphics performance for questionable physics? You’ll be hard pressed to find game developers who don’t want to use all the graphics power they can get, thus leaving very little for anything else in that chip." What is Havok's response to this?

Jeff Yates: Well, I’m sure the AGEIA folks have heard about General Purpose GPU or “GP-GPU” initiatives that have been around for years. The evolution of the GPU and the programmable shader technology that drives it have been leading to this moment for quite some time. From our perspective, the time has arrived, and things are never going to go backwards. So, if people are going to purchase extra hardware to do physics, why not purchase an extra GPU, or better yet relegate last year’s GPU to physics, and get a brand new GPU for rendering? The fact is that this is not stealing from the graphics – rather it gives the option of providing more horsepower to the graphics, or the physics, or both – depending on what a particular game needs. I fail to see how that’s a bad thing. Not to mention that downward pricing for “last year’s” GPUs are already feeding the market with physics-capable GPUs at the sub $200 price point –even reaching the magic $100 price point.

FiringSquad: Finally is there anything else you want to say at this time about Havok and its plans?

Jeff Yates: Well we have so much good stuff coming – Havok FX is very exciting, but we are also preparing to launch our latest character behavior tool and sdk: Havok Behavior. This is really the next step forward for blending physics, animation, and behaviors, all with a configurable tool set that supports direct export and editing of content from 3ds max, Maya, and XSI. And I forgot to mention next generation optimizations and Nintendo Wii support. It is a lot, but it is all part of our big 4.0 release coming this summer. It is definitively an exciting time for us.

"

Nintendo may not use Shader Model 3 or higher on the Wii, since Shader Model is part of Microsoft's proprietary DirectX, but they can use something like the OpenGL Shading Language. For example, GLSL version 1.30 roughly corresponds to Shader Model 4.0.

[www.opengl.org]

I would place ATI's Hollywood between an ATI RV610 and an RV630. Besides being capable of HDR+AA like the ATI R520, Hollywood has a GPU vertex cache and can do vertex texture fetching, according to one of Nintendo's displacement mapping patents (valid until 2020). ATI did not offer those features until the HD 2000 series (R600 GPUs) came out. They are necessary for vertex displacement mapping, which does almost all of the displacement work on the GPU and leaves the CPU only the job of providing the original vertex positions (which is next to nothing). In short, this technique makes use of GPGPU algorithms. Plus, the ATI R600 GPUs were also the first to introduce a command processor.
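The per-vertex work described above can be sketched as follows. The CPU only supplies the original vertices, and the GPU fetches a height value from a texture and pushes each vertex along its normal. This is a plain-Python illustration of vertex displacement mapping under simple assumptions (nearest-neighbour texture fetch, hypothetical helper names). It says nothing about how Hollywood or any specific game implements it.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Vertex = Tuple[Vec3, Vec3, Tuple[float, float]]  # (position, normal, (u, v))


def sample_height(heightmap: List[List[float]], u: float, v: float) -> float:
    """Hypothetical helper: nearest-neighbour fetch from a 2D height texture, u and v in [0, 1]."""
    rows, cols = len(heightmap), len(heightmap[0])
    i = min(int(v * rows), rows - 1)
    j = min(int(u * cols), cols - 1)
    return heightmap[i][j]


def displace(vertices: List[Vertex], heightmap: List[List[float]], scale: float) -> List[Vec3]:
    """Vertex displacement mapping: p' = p + n * h(u, v) * scale, once per vertex.

    The CPU only supplies the original vertices; everything in this loop is
    the per-vertex work a GPU with vertex texture fetch would do.
    """
    out: List[Vec3] = []
    for (px, py, pz), (nx, ny, nz), (u, v) in vertices:
        h = sample_height(heightmap, u, v) * scale  # the "vertex texture fetch"
        out.append((px + nx * h, py + ny * h, pz + nz * h))
    return out
```

Each vertex is processed independently, so the loop body is exactly what a vertex shader would execute for every vertex in parallel.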

In that patent I read about vertex fetching and a vertex cache, which ATI did not offer until its HD 2000 series (R600) and which are used for vertex displacement mapping:

Patent Storm

[www.patentstorm.us]

"

US Patent 6980218 - Method and apparatus for efficient generation of texture coordinate displacements for implementing emboss-style bump mapping in a graphics rendering system

US Patent Issued on December 27, 2005

Estimated Patent Expiration Date: November 28, 2020

Estimated Expiration Date is calculated based on simple USPTO term provisions. It does not account for terminal disclaimers, term adjustments, failure to pay maintenance fees, or other factors which might affect the term of a patent.

Command processor 200 receives display commands from main processor 110 and parses them—obtaining any additional data necessary to process them from shared memory 112. The command processor 200 provides a stream of vertex commands to graphics pipeline 180 for 2D and/or 3D processing and rendering. Graphics pipeline 180 generates images based on these commands. The resulting image information may be transferred to main memory 112 for access by display controller/video interface unit 164—which displays the frame buffer output of pipeline 180 on display 56.

FIG. 5 is a logical flow diagram of graphics processor 154. Main processor 110 may store graphics command streams 210, display lists 212 and vertex arrays 214 in main memory 112, and pass pointers to command processor 200 via bus interface 150. The main processor 110 stores graphics commands in one or more graphics first-in-first-out (FIFO) buffers 210 it allocates in main memory 110. The command processor 200 fetches: command streams from main memory 112 via an on-chip FIFO memory buffer 216 that receives and buffers the graphics commands for synchronization/flow control and load balancing, display lists 212 from main memory 112 via an on-chip call FIFO memory buffer 218, and vertex attributes from the command stream and/or from vertex arrays 214 in main memory 112 via a vertex cache 220. Command processor 200 performs command processing operations 200a that convert attribute types to floating point format, and pass the resulting complete vertex polygon data to graphics pipeline 180 for rendering/rasterization. A programmable memory arbitration circuitry 130 (see FIG. 4) arbitrates access to shared main memory 112 between graphics pipeline 180, command processor 200 and display controller/video interface unit 164.

FIG. 4 shows that graphics pipeline 180 may include: a transform unit 300, a setup/rasterizer 400, a texture unit 500, a texture environment unit 600, and a pixel engine 700.

Transform unit 300 performs a variety of 2D and 3D transform and other operations 300a (see FIG. 5). Transform unit 300 may include one or more matrix memories 300b for storing matrices used in transformation processing 300a. Transform unit 300 transforms incoming geometry per vertex from object space to screen space; and transforms incoming texture coordinates and computes projective texture coordinates (300c). Transform unit 300 may also perform polygon clipping/culling (300d). Lighting processing 300e also performed by transform unit 300b provides per vertex lighting computations for up to eight independent lights in one example embodiment. As discussed herein in greater detail, Transform unit 300 also performs texture coordinate generation (300c) for emboss-style bump mapping effects.

Setup/rasterizer 400 includes a setup unit which receives vertex data from transform unit 300 and sends triangle setup information to one or more rasterizer units (400b) performing edge rasterization, texture coordinate rasterization and color rasterization.

Texture unit 500 (which may include an on-chip texture memory (TMEM) 502) performs various tasks related to texturing including for example: retrieving textures 504 from main memory 112, texture processing (500a) including, for example, multi-texture handling, post-cache texture decompression, texture filtering, embossing, shadows and lighting through the use of projective textures, and BLIT with alpha transparency and depth, bump map processing for computing texture coordinate displacements for bump mapping, pseudo texture and texture tiling effects (500b), and indirect texture processing (500c).

"

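The flow the patent describes can be modelled as a toy producer/consumer. The main processor writes commands into a FIFO in main memory, and the command processor drains it, converting vertex attributes to floating point before handing them to the graphics pipeline. The command names, tuple layout and fixed-point encoding below are hypothetical. The real Flipper/Hollywood command format is different and is not described in the quoted text.

```python
from collections import deque
from typing import Deque, List, Tuple


def to_float(value: int, frac_bits: int) -> float:
    """Convert a fixed-point attribute with frac_bits fractional bits to floating point."""
    return value / (1 << frac_bits)


class CommandProcessor:
    """Toy model of the patent's CPU -> FIFO -> command processor flow (hypothetical format)."""

    def __init__(self) -> None:
        self.fifo: Deque[tuple] = deque()            # stands in for the FIFO in main memory
        self.pipeline: List[Tuple[float, ...]] = []  # vertices handed to the graphics pipeline

    def cpu_write(self, command: tuple) -> None:
        """Main processor side: append a command to the FIFO."""
        self.fifo.append(command)

    def run(self) -> None:
        """Command processor side: drain the FIFO in order and convert attributes to float."""
        while self.fifo:
            op, *args = self.fifo.popleft()
            if op == "VERTEX":
                raw_xyz, frac_bits = args
                self.pipeline.append(tuple(to_float(c, frac_bits) for c in raw_xyz))
            elif op == "NOP":
                continue
            else:
                raise ValueError(f"unknown command {op!r}")
```

The point of the FIFO is decoupling. The CPU can queue a frame's worth of commands and move on while the command processor consumes them at the GPU's pace.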
Plus, the ATI RV610 has very low power consumption, about 25 to 36 watts, while the Wii as a whole consumes around 53 watts according to its power supply. In addition, the Wii's GPU is said to have cost around $30 to produce (this is a production cost, not a retail price for consumers), while an ATI Radeon HD 2400 Pro (GPU + memory + etc.) was released at around $59.

Wii GPU production cost: $29.60

[www.reghardware.co.uk]

"

Nintendo said to profit on Wii production

Consoles costs under $160 to manufacture?

By Tony Smith

18th December 2006 10:50 GMT

Nintendo may well be making so much profit on its Wii console it can well afford to replace broken Remote straps. According to a Japanese publication's assessment of the machine's innards, the console costs the videogames company less than $160 to assemble.

In a report published by Japanese business weekly the Toyo Keizai and relayed by Japanese-language site WiiInside, the most expensive component inside the Wii is the DVD drive, which, the paper estimates, costs $31. Next comes ATI's 'Hollywood' graphics chip, at $29.60. The IBM-designed and made CPU, 'Broadway', costs $13, the report reckons.

Add these items to the other components, roll in an assembly cost of $19.50 and you get a manufacturing cost of $158.30. The Toyo Keizai estimates that Nintendo's wholesale price is a cent less than $196. The consoles costs the consumer - if he or she can find one available to buy right now, of course - $250.

So, if the Toyo Keizai is right, Nintendo's making the best part of $40 for every console it sells, and by most accounts it's sold well over a million of them worldwide.

Don't forget, though, it has to cover the development cost, the money spent creating the Wii's on-board software, and the physical distribution and marketing costs, but it nonetheless establishes a nice pattern for Nintendo, which does very nicely out of games sales, whether Wii-specific titles or older ones made for previous consoles that the company can now sell again as downloads. ®

"

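The arithmetic behind "the best part of $40" can be checked with the figures quoted above. All of them are Toyo Keizai estimates, not official Nintendo numbers. Decimal is used to avoid floating point rounding on money.

```python
from decimal import Decimal, ROUND_HALF_UP

# Estimates quoted from the Toyo Keizai report above, in USD.
manufacturing_cost = Decimal("158.30")
wholesale_price = Decimal("196.00") - Decimal("0.01")  # "a cent less than $196"
retail_price = Decimal("250.00")
gpu_cost = Decimal("29.60")                            # ATI 'Hollywood'

margin_per_console = wholesale_price - manufacturing_cost
retail_markup = retail_price - wholesale_price
gpu_share_pct = (gpu_cost / manufacturing_cost * 100).quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)

print(margin_per_console)  # 37.69
print(retail_markup)       # 54.01
print(gpu_share_pct)       # 18.7
```

So Hollywood accounts for under a fifth of the estimated build cost. The $29.60 is a production cost for the chip alone, whereas the HD 2400 Pro's $59 is the retail price of a complete card.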
ATI Radeon HD 2400 Pro price:

[www.phoronix.com]

"

The Radeon HD 2400PRO has a stock operating frequency of 525MHz for its core and 400MHz for its video memory while the Radeon HD 2400XT has its core running at 700MHz with the memory doubled at 800MHz. The prices for the low-end 2400PRO and 2400XT are $59 and $79 USD respectively. These ATI/AMD cards are of course competition for the NVIDIA GeForce 8500GT.

"

ATI Radeon HD 2400 Pro power consumption:

[www.dailytech.com]

"

At the bottom of the new ATI Radeon 2000-series lineup is the ATI Radeon HD 2400 series with PRO and XT models. The new ATI Radeon HD 2400 features 40 stream processors with four texture units and render backends. GPU clocks vary between 525 MHz to 700 MHz.

The ATI Radeon HD 2400 series features less than half the transistors as the HD 2600 – 180 million. AMD has the ATI Radeon HD 2400 series manufactured on the same 65nm process as the HD 2600. Power consumption of the ATI Radeon HD 2400 hovers around 25 watts.

"

Oh, and I forgot to mention that the ATI RV610 has a die size of only 82 mm²,

[www.beyond3d.com]

while we know that ATI's Hollywood is made of two dies: Vegas (94.5 mm²) and Napa (72 mm²). I have made some calculations of the possible die size of the Wii GPU, taking into account things like the DSP, USB controllers, memory and so on. I will save the calculations about the Wii memory and the Wii GPU die size for last, but for now I can tell you that the Wii GPU may take up at least around 80 mm². That assumes the 24 MB of eDRAM plus the DSP are on the Napa die, and the GPU plus the 3 MB of eDRAM are on Vegas.

By the way, the ATI Radeon R600 series was also the first to introduce a command processor, which is like a real processor. It was introduced to improve GPGPU capabilities even further.

[www.tomshardware.com]

"

Command Processor (CP)

9:46 AM - May 14, 2007 by D. Polkowski

According to ATI, the command processor (CP) for the R600 series is a full processor. Not that it is an x86

processor but it is a full microcode processor. It has full access to memory, can perform math computations and can

do its own thinking. Theoretically, this means that the command processor could offload a work from the driver as it

can download microcode to execute real types of instructions.

The CP parses the command stream sent by the driver and does some of the thinking associated with the command

stream. It then synchronizes some of the elements inside of the chip and even validates some of the command stream.

Part of the pipeline was designed to do some of the validation, such as understanding what rendering mode state it

is currently in.

"The whole chip was designed to alleviate all of this work from the driver," Demers said. "In the past the drivers

have (even on the 500 series) typically checked and went 'okay, the program is asking for all of these things to be

set up and some of them are conflicting.'"

If there is a conflict for resources and states, the driver can turn off and think about what to do next. What you

need to remember is that the driver is running on the CPU. The inherent problem with the driver thinking is that it

is stealing cycles from the CPU, which could be doing other more valuable things. ATI claims to have moved almost

all of that work - or, more importantly - all of the validation work, down into the hardware.

The command processor does a lot of this work and the chip is "self aware." The architecture allows it to snoop

around to check what the other parts of the hardware are up to. An example of this is when the Z buffer checks in on

how the pixel shader is doing. It is looking to see if it can kill pixels early. If a resource knows what is

happening, it can switch to a mode that is the most compatible to accomplish its tasks.

Improvements utilizing this type of behavior can explain why consoles are faster in certain applications than on the

PC. PCs are always checking the state of the application. There is a lot of overhead associated with this. If the

application asks for a draw command to be sent with an associated state, the Microsoft runtime will check some of it

and the driver will check some of it. It then has to validate everything and finally send it down to the hardware.

ATI feels that this overhead can be so significant that it moved as much as it could into the hardware.

This is what is generally referred to as the small batch problem. Microsoft's David Blythe commented that

miscommunication over application requirements, differing processing styles and mismatches between the API and the

hardware were the largest complaints from developers. He concluded that his analysis "failed to show any significant

advantage in retaining fine grain changes on the remaining state, so we collected the fine grain state into larger,

related, immutable aggregates called state objects. This has the advantage of establishing an unambiguous model for

which pieces of state should and should not be independent, and reducing the number of API calls required to

substantially reconfigure the pipeline. This model provides a better match for the way we have observed applications

using the API." Lowering the number of draw calls, setting of constants and other commands has allowed DX10 to win back this

overhead.
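As a toy illustration of the small-batch argument (this is not real Direct3D code; every class and method name here is hypothetical), the following Python model counts how many state checks happen when blend state is set field by field before each draw, versus bundled once into an immutable state object as Blythe describes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BlendState:
    """Immutable aggregate of related blend settings (hypothetical)."""
    enabled: bool
    src: str
    dst: str


class ToyRuntime:
    """Counts how often state has to be checked before it reaches the GPU."""

    def __init__(self) -> None:
        self.checks = 0

    def set_field(self, name: str, value) -> None:
        # Fine-grained model: every individual state change is re-validated.
        self.checks += 1

    def create_blend_state(self, enabled: bool, src: str, dst: str) -> BlendState:
        # State-object model: the whole aggregate is validated once, up front.
        self.checks += 1
        return BlendState(enabled, src, dst)

    def bind(self, state: BlendState) -> None:
        # Binding a pre-validated, immutable object needs no further checks.
        pass


def fine_grained_frame(rt: ToyRuntime, draws: int) -> int:
    for _ in range(draws):
        rt.set_field("blend_enabled", True)
        rt.set_field("blend_src", "SRC_ALPHA")
        rt.set_field("blend_dst", "INV_SRC_ALPHA")
    return rt.checks


def state_object_frame(rt: ToyRuntime, draws: int) -> int:
    blend = rt.create_blend_state(True, "SRC_ALPHA", "INV_SRC_ALPHA")
    for _ in range(draws):
        rt.bind(blend)
    return rt.checks


print(fine_grained_frame(ToyRuntime(), 1000))  # 3000
print(state_object_frame(ToyRuntime(), 1000))  # 1
```

The point of the model is only the ratio: with immutable state objects, the checking cost moves from every draw to object creation.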

Further reductions from hardware advances can also add up to an additional reduction in driver overhead in the CPU.

This can be "as much as 30%" says ATI. That does not mean applications will run 30% faster, it simply means that the

typical overhead of a driver in the CPU will be decreased. Depending on the application, the CPU utilization can be

as low as 1% or up to 10-15%. On average this should be only 5-7% but a 30% reduction would translate into a few %

load change off the CPU. While this is not the Holy Grail for higher frame rates, it is a step in the right

direction. There should be a benefit for current DX9 applications and DX10 was designed to be friendly from a small

batch standpoint so it should have more benefits than DX9 in terms of CPU load reduction.
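The percentages above are easy to misread, so here is the arithmetic spelled out: the 30% cut applies to the driver's share of CPU time, not to total CPU time. The 30% figure is ATI's claim quoted above and the 1% to 15% shares come from the same text; the function name is just for illustration.

```python
def cpu_points_saved(driver_share_pct: float, reduction: float = 0.30) -> float:
    """Percentage points of total CPU time freed when driver time shrinks by `reduction`."""
    return driver_share_pct * reduction


for share in (1, 5, 7, 15):
    print(f"driver uses {share}% of the CPU -> about {cpu_points_saved(share):.1f} points freed")
```

For the 5-7% average, that works out to roughly 1.5 to 2.1 percentage points, which is the "few %" the article mentions.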

Next page: [www.tomshardware.com]

[img.tomshardware.com]

The Setup Engine is located between the command processor and the ultra-threaded dispatch processor. Notice the

programmable tessellator as part of the setup engine?

Characteristically, ATI has had a typical primitive assembly setup / scan converter because it had a separate vertex shader. You could consider the area before the vertex shader as the setup engine.

ATI now only has one shader waiting for all of the different data streams coming in. For this reason, everything

between the command processor and the shader core is the shader setup engine.

This engine will do at least three kinds of processing in order to get everything ready for the shaders. Once

finished, it will send it into the shader.

Vertex:

It will do all of the vertex assembly and tessellation, compute the addresses of the vertices to be fetched, get the indices and do some math associated with them. The shader fetches vertices with the data that was computed by the setup unit and then sends them down for all of the vertex processing.

Geometry:

It is the same way with geometry. It will fetch all of the near-neighbor information and primitive information.

There is a lot of addressing computation done before sending it down to the shader for data computation.

Pixel:

This still does the classic pixel setup. That means getting ready for and the actual acts of scan conversion

(rasterization), generating the pixel data which will be sent to the shading through the interpolators. After that

the shaders (programs) will execute on the pixel shader.
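As a rough sketch of two of the setup jobs listed above (computing vertex addresses from indices, and scan conversion), here is a toy Python version. The buffer base address, the stride and the brute-force pixel loop are illustrative assumptions, not ATI's design; real setup hardware walks tiles and applies fill rules, which this ignores.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def vertex_addresses(indices: List[int], base: int, stride: int) -> List[int]:
    """Byte address of each indexed vertex in an interleaved vertex buffer."""
    return [base + i * stride for i in indices]


def edge(a: Point, b: Point, p: Point) -> float:
    """Edge function: non-negative when p is on the inside of edge a->b
    for a counter-clockwise triangle (y axis pointing up)."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def covered_pixels(v0: Point, v1: Point, v2: Point,
                   width: int, height: int) -> List[Tuple[int, int]]:
    """Brute-force scan conversion: test every pixel center against all three edges."""
    hits = []
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)
            if edge(v0, v1, p) >= 0 and edge(v1, v2, p) >= 0 and edge(v2, v0, p) >= 0:
                hits.append((x, y))
    return hits


print(vertex_addresses([0, 2, 1], base=0x1000, stride=32))  # [4096, 4160, 4128]
print(len(covered_pixels((0, 0), (4, 0), (0, 4), 4, 4)))     # 10
```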

Next page: Ultra-Threaded Dispatch Processor

Next page, on sequencing (excerpt): A CPU can only process a few threads at a time, while graphics processors can simultaneously accommodate thousands of threads. ATI has its workload set to 16 pixels per thread. When you multiply that by the number of threads, it means that at any given moment there can be tens of thousands of pixels in the shaders. With an abundance of work in a parallel manner, there should be no worries about masking latency. That is the art of parallel processing and why GPUs more easily hit their theoretical numbers, compared to CPUs.
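The latency-hiding argument can be put into numbers with a simple model. Only the 16 pixels per thread comes from the article; the 2,048-thread count, the 400-cycle memory latency and the 8 ALU cycles between fetches are hypothetical values chosen for illustration, and the model ignores everything except round-robin switching between threads.

```python
import math


def pixels_in_flight(threads: int, pixels_per_thread: int = 16) -> int:
    """Pixels resident in the shader core at once (16 per thread, per the article)."""
    return threads * pixels_per_thread


def threads_to_hide_latency(mem_latency_cycles: int, alu_cycles_per_fetch: int) -> int:
    """Round-robin model: while one thread waits on memory, the other threads must
    supply at least `mem_latency_cycles` of ALU work between them."""
    return math.ceil(mem_latency_cycles / alu_cycles_per_fetch) + 1


print(pixels_in_flight(2048))           # 32768
print(threads_to_hide_latency(400, 8))  # 51
```

With a few thousand threads resident, far more work is available than the ~51 threads this toy model needs, which is the sense in which latency stops being a worry.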

The article continues on the next page.

"

By analogy, the command processor in the Wii's Hollywood would be the Starlet, or the ARM9, as you prefer to call it.

Best-looking Wii games:

Zangeki no Reginleiv

Cursed Mountain

Dead Space Extraction

Resident Evil DarkSide Chronicles

Silent Hill Shattered Memories

Endless Ocean 2

GTI Club Supermini Festa!

etc.

If you wonder why, if the Wii has a very capable GPU, it hasn't been used properly, at least for vertex displacement mapping, the answer can be explained by Tim Sweeney of Epic Games:

Tim Sweeney: GPGPU Too Costly to Develop (3:11 PM, August 14, 2009, by Kevin Parrish; source: Tom's Hardware US)

[www.tomshardware.com]

"

Epic Games' chief executive officer Tim Sweeney recently spoke during the keynote presentation of the High

Performance Graphics 2009 conference, saying that it is "dramatically" more expensive for developers to create

software that relies on GPGPU (general purpose computing on graphics processing units) than those programs created

for CPUs.

He thus provides an example, saying that it costs "X" amount of money to develop an efficient single-threaded

algorithm for CPUs. To develop a multithreaded version, it will cost double the amount; three times the amount to

develop for the Cell/PlayStation 3, and a whopping ten times the amount for a current GPGPU version. He said that

developing anything over 2X is simply "uneconomic" for most software companies. To harness today's technology,

companies must lengthen development time and dump more money into the project, two factors that no company can

currently afford.

But according to X-bit Labs, Sweeney spent most of his speech preaching about the death of GPUs (graphics processing

units) in general, or at least in a sense as we know them today. This isn't the first time he predicted the

technology's demise: he offered his predictions of doom last year in this interview. Basically, the days of DirectX

and OpenGL are coming to a close.

“In the next generation we’ll write 100-percent of our rendering code in a real programming language--not DirectX,

not OpenGL, but a language like C++ or CUDA," he said last year. "A real programming language unconstrained by weird

API restrictions. Whether that runs on Nvidia hardware, Intel hardware or ATI hardware is really an independent

question. You could potentially run it on any hardware that's capable of running general-purpose code efficiently."

"

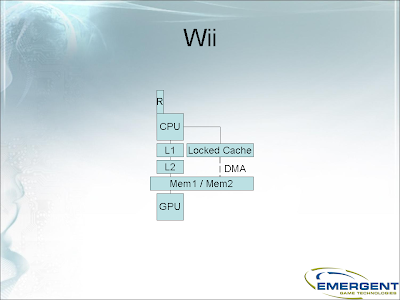

The best engine I know for the Wii is Gamebryo LightSpeed, since it includes Floodgate, a stream processing tool like CUDA or OpenCL, which could be used to unlock the true power of the Wii GPU, maybe even for vertex displacement mapping. Floodgate can run either on the CPU cores or on the GPU stream processors, vertex processors, etc.; in the case of the GPU, we must remember that GPGPU is applicable starting from ATI R520 technology and, for Nvidia, from the 8800.

[www.emergent.net]

"

A Real Wii Game Engine

Emergent was one of the first middleware vendors to support the Wii. Gamebryo makes it easy to build for multiple

and diverse hardware targets. Even if you're simultaneously developing for single-core and multi-core environments,

Gamebryo takes care of the heavy lifting, and you won't sacrifice power or control.

Gamebryo 2.6 establishes full tools capabilities for the Wii, including availability of the Terrain Builder and

Run-Time for this platform for the first time. Additional updates for Wii designers and programmers include:

Extended support for additional Wii texture formats

More efficient exports from artists’ tools through smaller data types that require less memory

Reduced MEM1 requirements for improved resource utilization

Highly optimized Floodgate™ stream processing tailored for the Wii

Gamebryo Leverages the Wii's Unique Architecture

Gamebryo for the Wii includes optimizations to the exporter pipeline, input mechanisms and rendering pipeline to

ensure that you get the most out of this popular console. Gamebryo also supports the Wii Remote™ controller, and

other standard Gamebryo features are mapped to the unique capabilities of this platform.

Cross-Platform Game Development With the Wii

Emergent’s Floodgate™ stream processing engine has been made Wii-aware for cross-platform game development projects:

execution is now automatically optimized using the locked cache functionality, which reduces risk when porting

multiprocessor code. Projects created with Gamebryo for other platforms can now be easily re-targeted to the Wii.

"
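Floodgate's own API is not shown in the sources quoted here, so the following is only a generic Python sketch of the stream-processing idea described above: a side-effect-free kernel applied to independent blocks of a data stream, which a scheduler can spread across workers. The function names and block size are hypothetical and this is not Floodgate code; Python threads also won't speed up CPU-bound math, so it shows the structure only.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def run_stream_kernel(kernel: Callable[[float], float],
                      stream: Sequence[float],
                      block_size: int = 256,
                      workers: int = 4) -> List[float]:
    """Apply `kernel` to every element of `stream`, one block per task (hypothetical helper)."""
    # Cut the stream into independent blocks; no block depends on another.
    blocks = [stream[i:i + block_size] for i in range(0, len(stream), block_size)]

    def process(block: Sequence[float]) -> List[float]:
        return [kernel(x) for x in block]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map yields results in submission order, so output order matches input.
        return [y for out in pool.map(process, blocks) for y in out]


xs = [float(i) for i in range(1000)]
scaled = run_stream_kernel(lambda x: 2.0 * x + 1.0, xs)
print(scaled[:3])  # [1.0, 3.0, 5.0]
```

Because the kernel touches only its own element, the same decomposition would work whether the blocks go to CPU cores or, on suitable hardware, to GPU shader units.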

According to Satoru Iwata (he said this in 2009), the Wii will be on the market for about 5 years, or even more than 8, since Nintendo won't launch new hardware while the current one can still be squeezed; only when the current hardware starts to slow down will they launch a new system.

Another proof that the Wii's Hollywood was designed with GPGPU in mind is that it has been making use of Havok FX for physics on the GPU.

[www.gamasutra.com]

If you still think that Hollywood has a fixed pipeline and doesn't have shader support, you should see this thread:

[boards.ign.com]

The Wii has an API called GX, which resembles OpenGL rather than DirectX, so you have to use something similar to the OpenGL Shading Language, not DirectX's Shader Model.

[www.levelup.com]

--- OK, here goes the part that calculates the Wii Hollywood die size, as well as the memory size.

First of all, read this article: [gear.ign.com]

This article was published in June 2006, a while after IGN reported the Wii's specs based on the SDKs that some third-party developers had, and of course those weren't the final SDKs.

"

According to the article, Nintendo had selected NEC Electronics to provide 90-nanometer CMOS-compatible embedded

DRAM technology for the forthcoming Wii console. The new LSI chips with eDRAM will be manufactured on NEC's

300-millimeter production line at Yamagata. While no clock speeds or really juicy info was included in the release,

the news does bring up a variety of interesting points.

There are also other interesting points here, like the mention of the MIM2 structure on eDRAM implementations (since

third quarter of 2005) and the revelation that NEC selected MoSys as the DRAM macro design partner for the "Wii

devices," due to the fact that MoSys is quite familiar with implementing 1T-SRAM macros on NEC's eDRAM process.

"

There is also an image showing the available NEC macros by year, so we can conclude from it that the Wii uses the UX6D macro made by NEC with MoSys technology.

Now, NEC said a 1T-SRAM macro from MoSys, right, but that does not mean the first generation of 1T-SRAM technology. If we want to confirm which one it uses, we just have to either call MoSys or go through its homepage and find out ourselves. There was a page named "MoSys available macros" that listed the macros and the process shrinks, in which you could see that only 1T-MIM macros were available from 90nm onward. That page is gone now, since MoSys made some changes to its homepage, and I can no longer find a table like the one they had previously. Nevertheless, you should know that each new macro technology MoSys develops is cheaper, meaning that using the original 1T-SRAM macro was not an option for the Wii, since it was expensive and not even available at 90nm.

[www.eetimes.com]

"

MoSys Inc. and NEC Electronics officials confirmed Monday they will supply Nintendo Co. Ltd. with 1T-SRAM and eDRAM

technology, respectively, for the Wii game console.

MoSys and Nintendo have worked together for the last six years. Previous generations of MoSys' 1T-SRAM technology

were built into the GameCube. The newest 1T-SRAM technology embedded in the Wii console uses NEC Electronics'

90-nanometer CMOS-compatible embedded DRAM (eDRAM) process technology.

NEC said it selected MoSys as the DRAM macro design partner for the Wii because the company has experience in

implementing 1T-SRAM macros on NEC's eDRAM process

"

It says "the newest 1T-SRAM technology", and as we know, the newest MoSys technology back then was 1T-SRAM-MIM, which is like 1T-SRAM-Q but using a MIM capacitor. Nevertheless, that was for MoSys's partnership with TSMC; NEC had its own MIM-2 technology, which Nintendo considered the best deal.

OK, let's check these:

NEC Electronics MIM2:

1.- [www.eu.necel.com]

2.- [www.necel.com]

3.- [www.necelam.com]

4.- [www.eu.necel.com]

[www.necel.com]

1.- In the first article we can see the "Key Technologies for eDRAM Cells" table, and only UX6D (CB-90) is mentioned at 90nm, with 1 Mb in 0.22 mm² (I looked for further information and discovered that this size includes the overhead), which is the same as saying 1 bit per 0.22 µm².

Here you can check the previous 1T-SRAM technologies: [en.wikipedia.org]. You may be wondering why there are two sizes, such as a 1T-SRAM-Q bit cell of 0.28 µm² and a 1T-SRAM-Q macro of 0.55 mm² including overhead, and whether both hold 1 Mbit. I found the answer in this other article, from a conference where MoSys talked about the evolution of their macro designs:

[www.bjic.org.cn]

As you can see, the bit cell must refer to just the capacitor size (since only its dimensions are given), and the macro size must be the whole circuit (since it gives both the area and the Mbits, in the format X mm²/Mb). This should also have been clear from the table in the IGN report listing the macro releases from 2002 to 2007.
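The two 1T-SRAM-Q figures can be reconciled with a quick calculation, under my assumption (not stated in the sources) that the 0.28 bit-cell figure is per bit in µm² and the 0.55 figure is the whole macro in mm² per Mb, using 2^20 bits per megabit.

```python
MBIT = 2 ** 20          # bits in one megabit (binary convention)
UM2_PER_MM2 = 1_000_000


def array_area_mm2(bit_cell_um2: float, bits: int = MBIT) -> float:
    """Raw area of the storage cells alone, with no periphery."""
    return bit_cell_um2 * bits / UM2_PER_MM2


def overhead_fraction(macro_mm2_per_mbit: float, bit_cell_um2: float) -> float:
    """Share of the macro taken by sense amps, decoders, refresh logic, etc."""
    return 1 - array_area_mm2(bit_cell_um2) / macro_mm2_per_mbit


cells = array_area_mm2(0.28)
share = overhead_fraction(0.55, 0.28)
print(f"{cells:.3f} mm2 of cells per Mbit, {share:.0%} of the 0.55 mm2 macro is overhead")
# 0.294 mm2 of cells per Mbit, 47% of the 0.55 mm2 macro is overhead
```

So both numbers describe 1 Mbit: the cells themselves take roughly half the macro, and the rest is the surrounding circuitry.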

**** Wii macro memory revealed ****

UX6D is a macro based on 1T-SRAM-Q at 90nm, with the FAC replaced by NEC's MIM2 technology. Since the only kind of eDRAM available at 90nm is UX6D, which uses MIM-2 (dielectric material ZrO2) and not the MIM (dielectric material Ta2O5) of its previous 130nm generation, what IGN reported is true, even if they don't explicitly mention UX6D as the Wii's macro design (they preferred to show it in the NEC macro release table included in their report).

Don't forget to read the Material Advantages section in the link, since I have not yet figured out what they mean by "ZrO2's high dielectric constant" (does this mean that it needs almost no refreshing compared to normal SRAM?).

So if both NEC and TSMC use their own MIM structure technology, and, as you saw in the previous articles, 1T-MIM is a derivative of the 1T-Q macro (0.55 mm²/Mb) measuring between 0.21 mm²/Mb and 0.23 mm²/Mb, then UX6D at 0.22 mm² is the full macro that holds 1 Mb. Of course, this may change a little depending on which of the three CB-90 types (in the UX6D context) is used, and on the clock speed.

Still in doubt? Don't worry: just read this, and even a skeptical person will have no doubt that everything I have said so far is fact: [www.necel.com]

Once you read it, you find out that the article is practically suggesting what 'Vegas' could have, but it uses the GameCube's Flipper as an example. It also notes that Flipper had 24 Mbits, but that today's UX6D could easily replace half of Flipper's die with 256 Mbits (32 MB). Flipper's die was about 110 mm², so in 55 mm² you can store 32 MB.

Remember that UX6D is 0.22 mm² per 1 Mb (megabit). Then let's do the following:

1 / 0.22 = 4.5454 Mb per mm², and 4.5454 / 8 = 0.5682 MB/mm²

32 MB / 55 mm² = 0.5818 MB/mm²

So 0.5818 MB/mm² ≈ 0.5682 MB/mm². And we are assuming a 110 mm² die for Flipper; if it was really 112 mm², the match would be even closer (32 MB / 56 mm² = 0.5714 MB/mm²).
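The density comparison can be checked with a few lines of Python; 0.22 mm²/Mb is the UX6D figure and 110 or 112 mm² are the two Flipper die sizes considered in the text. The function names are only for illustration.

```python
def density_from_macro(mm2_per_mbit: float) -> float:
    """MB per mm2 implied by a macro size given in mm2 per megabit."""
    return (1.0 / mm2_per_mbit) / 8.0  # Mb/mm2 -> MB/mm2 (8 bits per byte)


def density_from_die(megabytes: float, area_mm2: float) -> float:
    """MB per mm2 if `megabytes` fit in `area_mm2`."""
    return megabytes / area_mm2


ux6d = density_from_macro(0.22)                # UX6D macro figure
flipper_110 = density_from_die(32, 110 / 2)    # 32 MB in half of a 110 mm2 die
flipper_112 = density_from_die(32, 112 / 2)    # same, if the die was 112 mm2
print(f"{ux6d:.4f} {flipper_110:.4f} {flipper_112:.4f}")  # 0.5682 0.5818 0.5714
```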

Let's not forget that this memory die size assumes a speed of about 200 to 300 MHz; I am not sure how much the speed can affect the die size, and there is no information about it. Check CB-90M for more information.

OK then, how much die area could 24 MB of eDRAM occupy?

1 mm² ≈ 0.568 MB, so 24 MB / 0.568 MB/mm² ≈ 42.2 mm²

And for 3 MB:

3 MB / 0.568 MB/mm² ≈ 5.3 mm²

OK, remember that these are approximations. Plus, I am not sure there are just 3 MB of eDRAM in Vegas, since Ubi once said that he placed Hollywood somewhere between an ATI X1400 and X1600, and that the Wii had 6 MB: 2 MB for the framebuffer and Z-buffer and 4 MB for texture cache, but that's another story.

[newsgroups.derkeiler.com]

OK, that's it for the memory, but what about things like the USB controller, the ARM9, etc.?

The ARM926EJ-S in Hollywood is about 1.45 mm², according to:

[www.tensilica.com]

As for the USB controllers, SD controllers, etc., I can't imagine them occupying a lot of room, since even an ARM7 occupies just 0.47 mm². I did a little further investigation and ended up concluding that Wii Hollywood might have an embedded ATI Imageon, which is the ARM9 plus other logic, and that these could occupy from 3 to 5 mm² depending on design complexity.

So let's budget 5 mm² for the Starlet plus components (the top of that range) and add the ~5.3 mm² of the 3 MB of eDRAM. Vegas has a 94.5 mm² die, so:

94.5 - (5 + 5.3) = 84.2 mm²
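The area budget can be reproduced as follows. It assumes, as the text does, that eDRAM costs 0.22 mm² per Mb (so 1.76 mm² per MB) at the relevant clock, that Vegas is 94.5 mm², and that 5 mm² covers the Starlet and its peripherals; the 6 MB line explores the alternative figure attributed to Ubi. Names are illustrative.

```python
MM2_PER_MB = 0.22 * 8  # UX6D: 0.22 mm2 per megabit -> 1.76 mm2 per megabyte
VEGAS_DIE_MM2 = 94.5
STARLET_MM2 = 5.0      # Starlet + USB/SD controllers etc., estimate used in the text


def edram_area_mm2(megabytes: float) -> float:
    return megabytes * MM2_PER_MB


def area_left_for_gpu(edram_mb: float,
                      die_mm2: float = VEGAS_DIE_MM2,
                      starlet_mm2: float = STARLET_MM2) -> float:
    """Die area remaining after subtracting the eDRAM and the Starlet block."""
    return die_mm2 - edram_area_mm2(edram_mb) - starlet_mm2


print(f"24 MB eDRAM: {edram_area_mm2(24):.1f} mm2")                # 42.2
print(f"3 MB eDRAM:  {edram_area_mm2(3):.1f} mm2")                 # 5.3
print(f"left for GPU with 3 MB: {area_left_for_gpu(3):.1f} mm2")   # 84.2
print(f"left for GPU with 6 MB: {area_left_for_gpu(6):.1f} mm2")   # 78.9
```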

From what I read on WiiBrew, Napa is supposed to hold the 24 MB of eDRAM plus the DSP.

Well, I hope this information is useful.

--- Wii 720p

It is well known that the Wii can only output 480p right now, but something interesting happened to me while I was testing MPlayer CE or GeeXboX (I don't remember which one). I tried a 720p movie, DBZ: Broly Second Coming, and I clearly saw that the movie was recognized, but of course the framerate was extremely low, around 5 to 10 fps. I also noticed that the image was a lot clearer and was probably running at 720p, though maybe it was being downscaled; I'm not sure, but if it was downscaled, I must say the image quality was really good.

OK then, if the problem with current applications like MPlayer CE is that 720p requires too much power from the CPU, there are ways to hand some of the work to the GPU so that most of the CPU work can be offloaded; in a few words, use GPGPU software.

I have read about things like GPGPU for H.264 and other things: [developer.amd.com] (PDF)
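One classic piece of video playback that is commonly moved to the GPU is color-space conversion, because every pixel is independent. The sketch below uses the full-range BT.601 (JPEG-style) coefficients and assumes 4:4:4 chroma for simplicity; real H.264 streams are usually limited-range and 4:2:0, and the Python loop only stands in for what would be one shader thread per pixel. It is not taken from MPlayer CE or from the AMD paper.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def clamp8(v: float) -> int:
    """Round and clamp to the 0..255 range of an 8-bit channel."""
    return max(0, min(255, round(v)))


def yuv_to_rgb(y: int, u: int, v: int) -> RGB:
    """Full-range BT.601 (JPEG-style) YCbCr -> RGB for a single pixel."""
    d, e = u - 128, v - 128
    r = y + 1.402 * e
    g = y - 0.344136 * d - 0.714136 * e
    b = y + 1.772 * d
    return clamp8(r), clamp8(g), clamp8(b)


def convert_frame(ys: Sequence[int], us: Sequence[int], vs: Sequence[int]) -> List[RGB]:
    # Each output pixel depends only on its own inputs, which is exactly the
    # kind of work a GPU can run as one shader thread per pixel.
    return [yuv_to_rgb(y, u, v) for y, u, v in zip(ys, us, vs)]


print(convert_frame([0, 128, 255], [128] * 3, [128] * 3))
# [(0, 0, 0), (128, 128, 128), (255, 255, 255)]
```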

If there were a way to use OpenCL, CUDA, ATI Stream or Floodgate for Wii homebrew, it would be interesting to see how much better the results could get. Right now Nvidia is working with Adobe on a Flash Player that makes use of GPGPU.

By the way, as for the Dolphin Wii emulator, you should know that games like Dead Space Extraction require a lot of hardware: a dual-core 3 GHz AMD Athlon, 4 GB of RAM, a GPU from the HD 2000 series onward and, going even further, the use of OpenCL to deal with framerate slowdowns and other things. Even with all that, the game runs at just 15 to 20 fps, most probably because the game makes heavy use of physics.

To emulate the GameCube, by contrast, you only need:

CPU: more than 1 GHz recommended

ATI Radeon 9500 or better

256 MB of RAM

Do you see the clear difference?

I only researched the Dolphin Wii/GameCube emulator to show, as an example, that the Wii has a totally different architecture from the GameCube; I am not promoting its use.

It would be interesting to see the code of games like

Zangeki no Reginleiv

Monster Hunter 3

Resident Evil DarkSide Chronicles

Cursed Mountain

Dead Space Extraction

I just hope that future projects will make use of stream processing engines like OpenCL, CUDA or ATI Stream. The only issue is that it is unknown whether the Wii GPU will be compatible with, for example, OpenCL, since OpenCL doesn't support all video cards: even though it was intended to run on any GPU designed with GPGPU in mind, some ATI and Nvidia cards designed for that are not supported yet.


ATI R520 (the GPU in the ATI Radeon X1000 series): first GPU that supported HDR+AA

[en.wikipedia.org]

"

ATI's DirectX 9.0c series of graphics cards, with complete Shader Model 3.0 support. Launched in October 2005, this

series brought a number of enhancements including the floating point render target technology necessary for HDR

rendering with anti-aliasing. Cards released include X1300 - X1950.

"

ATI's Hollywood was finished later than June 2006, when NEC announced that it would provide the eDRAM for the Wii in collaboration with MoSys.

Monster Hunter 3 of Wii shows us HDR+AA

[news.vgchartz.com]

"

Monster Hunter 3 demo impressions

By Nick Roussounelos 14th Jun 2009


We tried out the Monster Hunter 3 demo bundled with the Wii version of Monster Hunter G. Quest Complete!

Monster Hunter 3 (~Tri) Preview

Upon the series debut on PS2 back in 2004 the public reaction to the Monster Hunter series was, at best, mediocre.

Someone, who is unquestionably very happy now, proposed porting Monster Hunter to Sony's portable wonder and here we

are, with MH2G climbing to 3 million and counting! Now, let's take a sneak peek at how things fare for the next

iteration of the franchise, Monster Hunter 3, developed from the ground up on Wii, through the free demo bundled

with the system's own version of Monster Hunter G which was recently released. Before we start, let me make it clear

that I don't speak Japanese, so don't ask for any story coverage.

The demo consists of two quests, hunt either a Kurubekko (little wyvern much like Kut-Ku) or an alpha carnivore,

Dosjagi. You can choose between 5 weapons: Great Sword, Sword & Shield, Hammer, Light Crossbow and Heavy Crossbow.

Make your selections and off you go! The first thing you'll notice is the spectacular graphics; no, that's no

hyperbole. The lighting, the texture detail, anti-aliasing (yes, you read that right, no jaggies), HDR (High dynamic

range rendering for us geeks) and other technologies make this one of the prettiest Wii games on the market, period.

PSP Monster Hunter games looked phenomenal on the platform and pushed the hardware beyond what was thought possible,

Capcom made no compromises this time either. The game also features a great musical score, which is much more epic

in scope than previous iterations. Loading times between areas take 1-2 seconds tops, nothing disturbing, but

not very pleasant either.

"

There are also other Wii games that have shown HDR+AA, like Cursed Mountain, Resident Evil DarkSide Chronicles and Silent Hill, and it seems that Xenoblade will too. By the way, you should also look at games like Zangeki no Reginleiv; watch the videos on gametrailers.com. There is also something you should know: HDR cannot be done without programmable shaders.

Here is one source for this:

[courses.csusm.edu]

"

OpenGL fixed functions cannot be used for HDR.

The programmable pipeline must be used to achieve HDR lighting effects.

HDR is achieved in OpenGL using a fragment shader, written in GLSL or Cg.

"
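The quote says HDR is produced in a fragment shader. As a hedged illustration of what such a shader typically does at the end of an HDR pipeline, here is the simplest per-channel form of Reinhard tone mapping, x / (1 + x), written per pixel in Python. A real implementation would be GLSL or Cg on PC, or whatever the Wii's GX pipeline allows; this is not taken from any Wii game, and the exposure and gamma values are assumptions.

```python
from typing import Tuple

Color = Tuple[float, float, float]


def reinhard(c: Color, exposure: float = 1.0) -> Color:
    """Per-channel Reinhard operator x / (1 + x): maps [0, inf) into [0, 1)."""
    r, g, b = (ch * exposure for ch in c)
    return r / (1.0 + r), g / (1.0 + g), b / (1.0 + b)


def to_8bit(c: Color, gamma: float = 2.2) -> Tuple[int, int, int]:
    """Gamma-encode a [0, 1) color and quantize it for an 8-bit framebuffer."""
    r, g, b = (round(255 * (x ** (1.0 / gamma))) for x in c)
    return r, g, b


# HDR input channels can exceed 1.0; after tone mapping they fit the display range.
print(reinhard((3.0, 1.0, 0.0)))  # (0.75, 0.5, 0.0)
print(to_8bit(reinhard((40.0, 8.0, 0.5))))
```

The per-channel form is the easiest to read; a luminance-based variant preserves hue better but may need an extra clamp for saturated colors.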

By the way, Zelda: Twilight Princess did not use HDR; it was simulated HDR. Just compare Zelda with Monster Hunter 3 images and you will easily see the difference.

I am sure that most developers who say the Wii has no programmable shaders say so because they know DirectX's Shader Model but not the OpenGL Shading Language, since Nintendo uses an API called GX, which is very similar to OpenGL; there have also been successful attempts at using wrappers like the Mesa (OpenGL) driver.

[www.phoronix.com]

"

A Mesa (OpenGL) Driver For The Nintendo Wii?

Posted by Michael Larabel on January 28, 2009

There is now talk on the Mesa 3D development list about the possibility of having a Mesa driver for the Nintendo

Wii. Those working on developing custom games for this console platform have already experienced some success in

bringing OpenGL to the Wii through the use of Mesa.

Nintendo has its own graphics API (GX) for the Wii, which is resemblant of OpenGL but still different enough that

some work is required to get OpenGL running. A way to handle this though is by having a Mesa driver translate the

OpenGL calls into the Wii's GX API. This is similar to gl2gx, which is an open-source project that serves as a

software wrapper for the Nintendo Wii and GameCube.

Their first OpenGL instructions running on the Nintendo Wii are shown below (it's not much, but at least it's better than seeing glxgears).

[www.phoronix.net]

"
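To make the "translate OpenGL calls into GX" idea concrete, here is a toy Python sketch; it is not Mesa or gl2gx code, and the class and method names are hypothetical. One mismatch it models: libogc's GX_Begin takes the primitive type, a vertex format and the vertex count up front, while OpenGL's glBegin/glEnd never says how many vertices follow, so a wrapper has to buffer vertices until glEnd. The GX calls are only recorded as strings, and the exact libogc signatures should be treated as an assumption to check against its headers.

```python
from typing import List, Optional, Tuple

# GL primitive names mapped to the GX constants of the same shape.
PRIMITIVES = {"GL_TRIANGLES": "GX_TRIANGLES", "GL_QUADS": "GX_QUADS"}


class ToyGLtoGX:
    """Buffers immediate-mode GL vertices and replays them as one GX-style batch."""

    def __init__(self) -> None:
        self.mode: Optional[str] = None
        self.vertices: List[Tuple[float, float, float]] = []
        self.emitted: List[str] = []  # recorded GX-style calls; nothing reaches hardware

    def glBegin(self, mode: str) -> None:
        self.mode = mode
        self.vertices = []

    def glVertex3f(self, x: float, y: float, z: float) -> None:
        self.vertices.append((x, y, z))

    def glEnd(self) -> None:
        # Only now is the vertex count known, so only now can the GX begin be emitted.
        prim = PRIMITIVES[self.mode]
        self.emitted.append(f"GX_Begin({prim}, GX_VTXFMT0, {len(self.vertices)})")
        for x, y, z in self.vertices:
            self.emitted.append(f"GX_Position3f32({x}, {y}, {z})")
        self.emitted.append("GX_End()")
        self.mode = None


gl = ToyGLtoGX()
gl.glBegin("GL_TRIANGLES")
gl.glVertex3f(0.0, 1.0, 0.0)
gl.glVertex3f(-1.0, -1.0, 0.0)
gl.glVertex3f(1.0, -1.0, 0.0)
gl.glEnd()
print("\n".join(gl.emitted))
```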

Or perhaps it is because they are using the first SDKs, which came out even before Hollywood's design was finished.

There are many engines that support programmable shaders on the Wii, such as:

Unity for Wii: [unity3d.com]

"

Scriptable Shaders

Unity's ShaderLab system has been expanded to unlock the full power of The Wii console's graphics chip. Use one of

the built-in Wii-optimized shaders or write your own. Script and modify at runtime any shader on any objects in any

way you like.

"

Gamebryo LightSpeed for Wii:

[www.emergent.net]

"

Gamebryo 2.6 Features:

Full tools capabilities for Nintendo Wii:

Wii Terrain: Emergent Terrain System is now part of Wii, with base terrain/shader support, port of terrain samples

and detail maps in shader.

Display List from tools reduces memory requirements

Improved resource utilization via VAT

Copy / Fast Copy

Mesh profiles

Floodgate – improves performance and reduces risk when porting from multi-core platforms.

Reduces MEM1 memory requirements

Saves memory with exports that use smaller data types

"

Athena engine for Wii (used in Cursed Mountain): [wii.ign.com]

"

To deliver on these goals, the Sproing team relies on its proprietary "Athena" game engine, which is rendering the

Himalayas on Wii at a quality never seen before. The engine highlights of "Athena" include amongst others,

HDR-Rendering, shader simulations developed especially for Wii in order to display ice, heat and water (realistic

reflections and refractions), an ultra-fast particle system for amazing snow storms, soft particles for realistic

fog and smoke, depth of field, motion blur, dynamic soft shadows, spherical harmonics lighting, as well as a high

performance level-of-detail and streaming system in order to provide long viewing distance of the entire

surrounding. In order to create an exciting atmosphere when battling the ghosts, the game employs a number of

custom-created special effects such as the shader simulations as well as a newly developed post-processing

framework. "Our engine technology really takes the Wii hardware to its limits and Wii gamers can really look forward

to a heart-stopping, and breath-taking world that comes alive with this title," said Gerhard Seiler, Technical

Director of Sproing.

"

Shark 3D for Wii™ (read about the Re-entrant Modular Shader System™):

[www.spinor.com]

"

4. Empower your game designer: Live editing

One of Shark 3D’s core features is the tool pipeline, which supports live editing from the bottom up.

Edit your game items and NPCs in the Shark 3D Wii game editor,

edit your game scripts,

edit your textures in Photoshop,

edit your animations and geometry in Max or Maya,

edit your rendering effects in the Shark 3D Wii shader editor, ...

... and see and test all your changes live not only on your PC, but also on your Wii development kit!

Furthermore, supported by Shark 3D's live-live™ editing, developers can easily modify and customize the tool

pipeline for their particular needs. This makes game production for Wii more efficient and predictable,

significantly reducing production times and risks.

5. Empower your artists: Lighting, shadowing, reflections on Wii - easier than ever!

The node based shader editor empowers your designers to create their own Wii shader graphs.

Your designers can easily create, combine and customize lighting, shadowing, reflections, shader animations, color

operations, and many other effects.

Internally, the shader editor automatically compiles the Wii shader graphs into assemblings of Shark 3D renderer

modules and pipeline stages, powered by the Re-entrant Modular Shader

System™ (http://www.spinor.com/?select=tech/render#modular_shader_system). Of course, the designers can edit the

shaders interactively both on the Wii and on PC. This is especially useful when fine tuning the look on the Wii.

8. Many useful run-time features included

Run-time features of Shark 3D for Wii include the following:

Rendering

Efficient rendering: Off-line generation and Wii specific optimization of Wii display lists and Wii vertex data.

Efficient skinning: Off-line assembling of skinned meshes specifically for the Wii. At run-time, the CPU does not

touch any vertex, index or polygon data anymore.

Wii specific off-line conversion of textures for optimal performance and minimal memory usage.

A multi-layer animation system.

A character walking animation system.

Efficient culling of static and movable objects for rendering.

A shader system providing access to Wii specific render state settings.

A particle system.

Dynamic local lights.

Lighting management respecting Wii specific aspects.

GUI rendering.

TrueType Unicode text rendering.

Smart management of object state dependencies using delayed evaluation.

Smart attachment point management.

Multiple viewports with separate culling for multiplayer games.

And many other features.

Sound

Optimized Wii specific sound formats.

Run-time support for compressed Ogg Vorbis files.

Efficient culling of static and movable sound sources placed in the level.

Streaming sound (e.g. background music) from disk.

Wii specific off-line optimization and conversion of sounds for optimal performance and minimal memory usage.

Various other sound engine features.

Physics

Collision detection.

Physics.

Automatically generated sound for physics collisions and friction.

Automatic merging of nearby contact sounds.

Various constraints.

Generic path-based motors.

Various other physics features.

Game engine

Triggers and sensors.

Scripting.

Level management.

Many other game engine features.

Streaming

Background loading of levels without halting the rendering loop.

Background loading of level parts and asset groups without halting the rendering loop.

Resource and memory management

Pack files.

Optimized low-level memory management.

Optimized for avoiding implicit memory allocations while a level is running. This avoids unexpected out-of-memory

errors while a level is running.

Many other features.

"

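The Shark 3D shader editor quoted above compiles a node-based shader graph into an ordered assembly of renderer modules and pipeline stages. The quote does not say how, so this is only a minimal Python sketch of the general idea, assuming each node lists the nodes whose outputs it reads: a topological sort (Python's graphlib.TopologicalSorter) groups the nodes into stages so every node runs after its inputs. The node names and the compile_to_stages function are hypothetical; this is not Spinor's Re-entrant Modular Shader System.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical shader graph: each node maps to the set of nodes whose
# outputs it reads. Names are invented for illustration only.
shader_graph = {
    "base_texture": set(),
    "normal_map": set(),
    "shadow": set(),
    "lighting": {"normal_map"},
    "reflection": {"normal_map"},
    "combine": {"base_texture", "lighting", "shadow", "reflection"},
    "output": {"combine"},
}


def compile_to_stages(graph):
    """Group nodes into stages so that every node only reads outputs
    produced in earlier stages. Raises graphlib.CycleError on cycles."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # nodes whose inputs are all computed
        stages.append(ready)
        sorter.done(*ready)                 # unlock the nodes that depend on them
    return stages


if __name__ == "__main__":
    for i, stage in enumerate(compile_to_stages(shader_graph)):
        print(f"stage {i}: {', '.join(stage)}")
```

For this example graph the sketch produces four stages: the base texture, normal map and shadow nodes first, then lighting and reflection, then combine, then output.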
Further proof that the ATI Hollywood was designed for GPGPU is Havok FX.

Wii Havok FX

[www.gamasutra.com]

"

Product: Havok Supports Wii, Next-Gen At E3

by Jason Dobson


May 15, 2006

During last week's E3 event in Los Angeles, cross-platform middleware physics solution provider Havok announced

support for the Wii, Nintendo's upcoming next-generation video game console platform.

The GameCube's current library of software titles feature more than fifteen games that utilize Havok middleware, and

this announcement confirms the continued support of Nintendo by Havok.

"Havok has become synonymous with state-of-the-art physics in games in recent years," said Ramin Ravanpey, Director

of Software Development Support, Nintendo of America. "With this announcement from Havok, we feel Wii developers

have another critical tool in their hands that helps unleash the real magic of the Wii platform."

In addition, Havok's software solutions were featured in 35 titles from 25 different developers at E3 across

multiple platforms, including Alan Wake, Alone in the Dark, Assassin, Auto Assault, BioShock, Brothers in Arms

Hell's Highway, Cars, Company of Heroes, Crackdown, Dawn of Mana, Dead Rising, Destroy All Humans! 2, F.E.A.R.,

Ghost Recon Advanced Warfighter, Happy Feet, Heavenly Sword, Hellgate: London, Just Cause, Killzone, Lost Planet:

Extreme Condition, Medal of Honor Allied Assault, Motor Storm, NBA Live 07, Over the Hedge, Saint's Row, Shadowrun,

Sonic The Hedgehog, Splinter Cell 4, Spore, Stranglehold, Superman Returns: The Videogame, Test Drive Unlimited, The

Ant Bully, The Godfather, and Urban Chaos: Riot Response.

"We wanted the action in our game to focus on interactive elements in a highly intuitive manner," says David Nadal,

Game Director at Eden Games. "We knew Havok Physics could help us do that for game-play elements, but we wanted to

push the envelope even further to add persistent effects that could interact with game-play elements. Havok's

GPU-accelerated physics effects middleware helped us achieve that in surprisingly little time."

Through the use of Havok FX and GPU technology, game developers are able to implement a range of physical effects

like debris, smoke, and fluids that add detail and believability to Havok’s physics system. Havok FX is

cross-platform, takes advantage of current and next-generation GPU technology, and utilizes the native power of

Shader Model 3 class graphics cards to deliver effect physics that integrate seamlessly with Havok’s physics

technology found in Havok Complete.

“With Havok FX we can explore new types of visual effects that add realism into Hellgate: London,” commented Tyler

Thompson, Technical Director, Flagship Studios. “Given the widespread installed base of GPUs and the incredible

performance of the new Nvidia GeForce 7900 boards, Havok FX was a natural choice."

"

As you may know, Havok FX is designed only for physics on the GPU, while Havok HydraCore is the one designed for multicore CPUs.
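Effect physics such as debris, smoke and fluids suits a GPU because each particle can be updated by the same small function that reads only its own state. Here is a minimal Python sketch of that data-parallel pattern, assuming point particles, constant gravity and a crude floor bounce; the names (Particle, step, step_all) are hypothetical, and this is not Havok FX's API.

```python
from dataclasses import dataclass
from typing import List

GRAVITY = -9.8  # assumed constant acceleration along y (m/s^2)


@dataclass(frozen=True)
class Particle:
    x: float
    y: float
    vx: float
    vy: float


def step(p: Particle, dt: float, floor: float = 0.0) -> Particle:
    """Advance one particle by dt using semi-implicit Euler.
    It reads only its own state, so every particle can run in parallel."""
    vy = p.vy + GRAVITY * dt
    x = p.x + p.vx * dt
    y = p.y + vy * dt
    if y < floor:  # crude bounce: clamp to the floor and keep half the speed
        y = floor
        vy = -vy * 0.5
    return Particle(x, y, p.vx, vy)


def step_all(particles: List[Particle], dt: float) -> List[Particle]:
    # The same kernel applied to each element independently; on a GPU each
    # particle would be handled by its own shader invocation.
    return [step(p, dt) for p in particles]
```

Because no particle reads another particle's state, the list comprehension could be split across any number of processing units without changing the result.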

If you still doubt that Havok FX has anything to do with GPGPU, don't worry:

[www.firingsquad.com]

"

..

FiringSquad: One of Havok's competitors, AGEIA, has said of the ATI-Havok FX hardware setup, "Graphics processors

are designed for graphics. Physics is an entirely different environment. Why would you sacrifice graphics

performance for questionable physics? You’ll be hard pressed to find game developers who don’t want to use all the

graphics power they can get, thus leaving very little for anything else in that chip. " What is Havok's response to

this?

Jeff Yates: Well, I’m sure the AGEIA folks have heard about General Purpose GPU or “GP-GPU” initiatives that have

been around for years. The evolution of the GPU and the programmable shader technology that drives it have been

leading to this moment for quite some time. From our perspective, the time has arrived, and things are never going

to go backwards. So, if people are going to purchase extra hardware to do physics, why not purchase an extra GPU, or

better yet relegate last year’s GPU to physics, and get a brand new GPU for rendering? The fact is that this is not

stealing from the graphics – rather it gives the option of providing more horsepower to the graphics, or the

physics, or both – depending on what a particular game needs. I fail to see how that’s a bad thing. Not to mention

that downward pricing for “last year’s” GPUs are already feeding the market with physics-capable GPUs at the sub

$200 price point –even reaching the magic $100 price point.

FiringSquad: Finally is there anything else you want to say at this time about Havok and its plans?

Jeff Yates: Well we have so much good stuff coming – Havok FX is very exciting, but we are also preparing to launch

our latest character behavior tool and sdk: Havok Behavior. This is really the next step forward for blending

physics, animation, and behaviors, all with a configurable tool set that supports direct export and editing of

content from 3ds max, Maya, and XSI. And I forgot to mention next generation optimizations and Nintendo Wii support.

It is a lot, but it is all part of our big 4.0 release coming this summer. It is definitively an exciting time for

us.

"

Nintendo may not use Shader Model 3 or higher on the Wii, since Shader Model is proprietary to Microsoft's DirectX on Windows, but they can use something like OpenGL's shading language (GLSL); for example, GLSL version 1.30 roughly corresponds to Shader Model 4.0.

[www.opengl.org]

I would place the ATI Hollywood between an ATI RV610 and an RV630. Besides being capable of HDR+AA like the ATI R520, Hollywood, according to one of Nintendo's displacement mapping patents (valid until 2020), has a GPU vertex cache and can do vertex texture fetching, something ATI did not offer until the HD 2000 series (ATI R600 GPUs) came out. Those features are necessary for vertex displacement mapping, which does almost all of the displacement work on the GPU and leaves the CPU only the job of providing the original vertex positions (which is next to nothing). In short, this technique makes use of GPGPU algorithms. Plus, the ATI R600 GPUs were also the first to introduce a command processor.
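Vertex displacement mapping can be written as p' = p + s·h(u, v)·n, where p is the original vertex position supplied by the CPU, n is the vertex normal, h is a height value fetched from a texture and s is a scale factor. Below is a minimal Python sketch, assuming nearest-neighbour sampling and a height map stored as a list of rows; the function names are hypothetical. The patent title quoted below refers to texture coordinate displacements for emboss-style bump mapping, so treat this as a sketch of the general technique rather than of the patent's method.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def sample_nearest(height_map: List[List[float]], u: float, v: float) -> float:
    """Nearest-neighbour height lookup with u, v in [0, 1]; stands in for a
    vertex texture fetch."""
    rows, cols = len(height_map), len(height_map[0])
    col = min(int(u * cols), cols - 1)  # clamp u == 1.0 to the last texel
    row = min(int(v * rows), rows - 1)
    return height_map[row][col]


def displace(position: Vec3, normal: Vec3, uv: Tuple[float, float],
             height_map: List[List[float]], scale: float) -> Vec3:
    """p' = p + scale * h(u, v) * n, computed independently for each vertex."""
    h = sample_nearest(height_map, *uv)
    return tuple(p + scale * h * n for p, n in zip(position, normal))
```

A whole mesh would be displaced by calling displace once per vertex; the CPU only has to supply each vertex's position, normal and texture coordinates.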

In that patent I read about vertex fetching and a vertex cache, features used for vertex displacement mapping that were not available on ATI GPUs until the HD 2000 series (ATI R600).
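The patent quoted below has the command processor fetch vertex attributes from main memory through a vertex cache and convert attribute types to floating point. Here is a minimal Python sketch of that flow, assuming an LRU replacement policy, a 4-entry cache and fixed-point attributes with 8 fractional bits (the patent excerpt specifies none of these); VertexCache, fixed_to_float and process_indexed_draw are hypothetical names.

```python
from collections import OrderedDict


class VertexCache:
    """Small LRU cache standing in for an on-chip vertex cache."""

    def __init__(self, memory, capacity=4):
        self.memory = memory      # "main memory": list of raw vertex records
        self.capacity = capacity
        self.lines = OrderedDict()
        self.fetches = 0          # reads that had to go to main memory

    def get(self, index):
        if index in self.lines:
            self.lines.move_to_end(index)    # hit: mark as most recently used
            return self.lines[index]
        self.fetches += 1                    # miss: read from main memory
        record = self.memory[index]
        self.lines[index] = record
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)   # evict the least recently used entry
        return record


def fixed_to_float(values, frac_bits):
    """Convert fixed-point integers with frac_bits fractional bits to floats."""
    scale = 1 << frac_bits
    return tuple(v / scale for v in values)


def process_indexed_draw(indices, cache, frac_bits=8):
    """Fetch each indexed vertex through the cache, then convert it to float."""
    return [fixed_to_float(cache.get(i), frac_bits) for i in indices]
```

For two triangles sharing an edge (indices 0, 1, 2, 2, 1, 3), only four of the six vertex reads go to main memory; the shared vertices come from the cache.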

Patent Storm

[www.patentstorm.us]

"

US Patent 6980218 - Method and apparatus for efficient generation of texture coordinate displacements for

implementing emboss-style bump mapping in a graphics rendering system

US Patent Issued on December 27, 2005

Estimated Patent Expiration Date: November 28, 2020

Estimated Expiration Date is calculated based on simple USPTO term provisions. It does not account for terminal

disclaimers, term adjustments, failure to pay maintenance fees, or other factors which might affect the term of a

patent.

Command processor 200 receives display commands from main processor 110 and parses them—obtaining any additional

data necessary to process them from shared memory 112. The command processor 200 provides a stream of vertex

commands to graphics pipeline 180 for 2D and/or 3D processing and rendering. Graphics pipeline 180 generates images

based on these commands. The resulting image information may be transferred to main memory 112 for access by display

controller/video interface unit 164—which displays the frame buffer output of pipeline 180 on display 56.

FIG. 5 is a logical flow diagram of graphics processor 154. Main processor 110 may store graphics command streams

210, display lists 212 and vertex arrays 214 in main memory 112, and pass pointers to command processor 200 via bus

interface 150. The main processor 110 stores graphics commands in one or more graphics first-in-first-out (FIFO)

buffers 210 it allocates in main memory 110. The command processor 200 fetches: command streams from main memory 112

via an on-chip FIFO memory buffer 216 that receives and buffers the graphics commands for synchronization/flow

control and load balancing, display lists 212 from main memory 112 via an on-chip call FIFO memory buffer 218, and

vertex attributes from the command stream and/or from vertex arrays 214 in main memory 112 via a vertex cache 220.

Command processor 200 performs command processing operations 200a that convert attribute types to floating point

format, and pass the resulting complete vertex polygon data to graphics pipeline 180 for rendering/rasterization. A

programmable memory arbitration circuitry 130 (see FIG. 4) arbitrates access to shared main memory 112 between

graphics pipeline 180, command processor 200 and display controller/video interface unit 164.

FIG. 4 shows that graphics pipeline 180 may include: a transform unit 300, a setup/rasterizer 400, a texture unit

500, a texture environment unit 600, and a pixel engine 700.

Transform unit 300 performs a variety of 2D and 3D transform and other operations 300a (see FIG. 5). Transform unit

300 may include one or more matrix memories 300b for storing matrices used in transformation processing 300a.

Transform unit 300 transforms incoming geometry per vertex from object space to screen space; and transforms